Is GPU Computing Right for Your R&D Simulation Needs?

Sep 11, 2024


In the mid-’90s, video games on personal computers were starting to boom. 3D graphics were cutting-edge, and the typical Gateway desktop couldn’t handle the visual demands without a dedicated “video card.” Video cards were the beginning of what we now call GPUs: Graphics Processing Units. At the end of the day, a GPU was a dedicated piece of hardware that handled the extra computational load the CPU core(s) could not. At the time, GPUs were used for video game rendering, and they still are today. However, their use has expanded into the high-performance computing (HPC) domain, resulting in faster engineering simulations.

Ansys has integrated GPU solver capability into Fluent, its CFD simulation tool, to great effect. Instead of relying on tens or hundreds of CPU cores to achieve quicker results, what if one or two GPUs could provide several times the computing power at a fraction of the cost of an equivalent CPU core count?

Not so fast! What about CPUs?

For the past few decades, throwing more CPU cores at simulation problems was the solution. With the advent of HPC, users were able to acquire tens or even hundreds of CPU cores that could be used in parallel to crunch the numbers of a simulation. The running assumption was as follows: more cores, more speed, faster results. And while that’s fantastic, having hundreds of CPU cores can get expensive in a hurry.


Newer simulation users typically don’t have access to this level of computing power until their organization is more established. To give an idea, the average 32-core workstation can cost around $10,000-$14,000, while a 128-core cluster (a server) can cost upwards of $50,000. That is a sizable gap in dollars between the two extremes, and this is where leveraging GPUs can come in handy.
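A quick sketch of the per-core arithmetic, using only the prices quoted above, suggests the cost per core is in a similar range at both ends, so the gap is mostly in total outlay. The 128-core figure is treated as a lower bound because the price is given as “upwards of” $50,000; the function name is our own illustration.

```python
# Cost-per-core arithmetic using the workstation and cluster prices quoted above.
# Note: the 128-core cluster price is a lower bound ("upwards of $50,000").

def cost_per_core(price_usd: float, cores: int) -> float:
    """Return hardware price divided by physical CPU core count."""
    return price_usd / cores

workstation_low = cost_per_core(10_000, 32)   # cheapest 32-core workstation
workstation_high = cost_per_core(14_000, 32)  # priciest 32-core workstation
cluster_floor = cost_per_core(50_000, 128)    # 128-core cluster, minimum price

print(f"32-core workstation: ${workstation_low:,.2f}-${workstation_high:,.2f} per core")
print(f"128-core cluster: at least ${cluster_floor:,.2f} per core")
```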

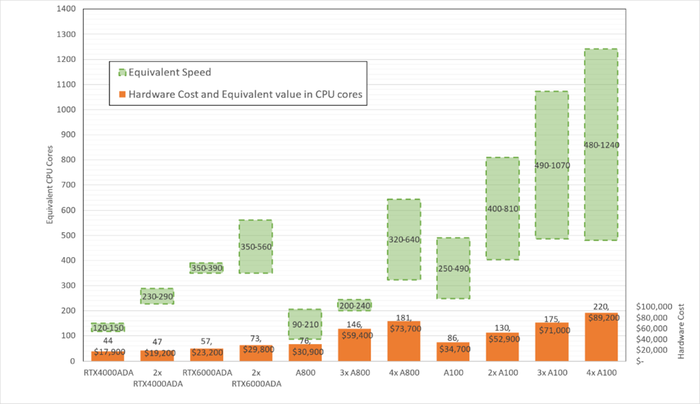

Ansys has integrated its native GPU solver into Fluent. Prior to this innovation, Fluent leveraged CPU cores to solve simulations faster; now it can offload those calculations directly to the GPU, and the resulting speed increase is tremendous. The RandSim team recently ran a GPU benchmarking study using Ansys Fluent and an assortment of GPUs to quantify that discrete benefit, which we call “equivalent CPU cores.” Compared with a standard 128 CPU core cluster (our baseline case), using a GPU to solve CFD simulations is several times faster:

Chart courtesy of RandSim

In the chart above, each GPU (x-axis) has two metrics: its equivalent CPU cores (green, y-axis) and its hardware cost compared with the equivalent value in CPU cores (orange, y-axis). The equivalent speed in CPU cores is shown as a range because its “effectivity” depends largely on the size of the simulation model (element count), the type of solver used, and the physics being simulated. Even so, the main takeaway from the chart is that most GPUs we tested are several times faster than our 120 CPU core workstation benchmark, at an extremely compelling price point.
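The article does not spell out how “equivalent CPU cores” is computed, so the sketch below is only one plausible interpretation, not the RandSim methodology: it assumes CPU performance scales linearly with core count and scales the baseline core count by the observed wall-clock speedup. The function name and the timings are hypothetical, chosen purely for illustration. Because the speedup changes with element count, solver type, and physics, running this over different models would naturally produce a range rather than a single number.

```python
# Hypothetical "equivalent CPU cores" metric (an assumption, not RandSim's method).
# Assumes linear scaling: a GPU run that finishes k times faster than an
# N-core baseline is counted as worth k * N CPU cores.

def equivalent_cpu_cores(baseline_cores: int,
                         baseline_wall_hours: float,
                         gpu_wall_hours: float) -> float:
    """Scale the baseline core count by the observed wall-clock speedup."""
    if gpu_wall_hours <= 0:
        raise ValueError("gpu_wall_hours must be positive")
    speedup = baseline_wall_hours / gpu_wall_hours
    return baseline_cores * speedup

# Hypothetical timings for illustration only (not benchmark data):
# the same case takes 6.0 h on a 120-core baseline and 2.0 h on one GPU.
print(equivalent_cpu_cores(120, 6.0, 2.0))
```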


For reference, our baseline 120 CPU core workstation was valued at approximately $52,000. For about half that price, you can purchase an RTX 6000 Ada-powered workstation with the equivalent of 350-390 CPU cores of performance. In simulation terms, that’s a lot of power.
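To put those figures side by side, here is a small price-performance sketch. It assumes “about half that price” means exactly $26,000, which is our simplification rather than a quoted price; the constant and function names are illustrative.

```python
# Price-performance arithmetic from the baseline and GPU figures quoted above.
# Assumption: "about half that price" is taken as exactly half of $52,000.

BASELINE_PRICE = 52_000          # 120-core CPU workstation (approximate value)
BASELINE_CORES = 120
GPU_PRICE = BASELINE_PRICE / 2   # assumed GPU workstation price
GPU_EQUIV_CORES = (350, 390)     # equivalent-core range quoted above

def price_performance(equiv_cores: int) -> tuple[float, float]:
    """Return (throughput relative to baseline, GPU price per equivalent core)."""
    return equiv_cores / BASELINE_CORES, GPU_PRICE / equiv_cores

baseline_per_core = BASELINE_PRICE / BASELINE_CORES
for equiv in GPU_EQUIV_CORES:
    speedup, gpu_per_core = price_performance(equiv)
    print(f"{equiv} equivalent cores: {speedup:.2f}x baseline, "
          f"${gpu_per_core:,.2f} vs ${baseline_per_core:,.2f} per core")
```

Under that assumption, the GPU workstation delivers roughly 2.9x to 3.25x the baseline throughput at about $67-$74 per equivalent core, compared with about $433 per core for the CPU baseline.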

So, what does this mean from a broader R&D or company-wide perspective?

More capital: With less capital spent on hardware, more can flow into other areas of the business. That could mean improving your simulation team’s resources through additional licensing, headcount, or training opportunities, all of which can further bolster capability, improve quality, and reduce warranty costs. These are cascading benefits that can directly impact market share, brand perception, and revenue. Alternatively, you could use the year-over-year savings to further augment product design capabilities, acquire physical testing equipment, or add product lines.

More time: The next obvious benefit. Shorter simulations enable a variety of design confidence improvements, including more time to optimize designs, a higher first-time pass rate in the physical test chamber, and less design rework. Because sufficient time is spent fine-tuning the design, the product that goes to market is far more robust. And with less risk attached to each project, overall timelines can be shorter, leading to quicker launch schedules. Opening the door for…

More innovation: If there’s more capital and more time for everything else, an organization is free to ask more strategic, forward-thinking questions: What else can we do? This is where innovation can truly thrive. Advanced technology and high-risk/high-return projects are often funded last. But what if those projects have tremendous potential to reshape the business and alter its market-share trajectory? What if you had the ability to fund and staff those projects? Becoming a marketplace disruptor can cement your company’s future. Nothing ventured, nothing gained, as the saying goes.

So the question stands: are GPUs the best path for your HPC needs? The answer is another question: can your business afford not to use them? The benefits they offer in speed, time, and cost are too large to ignore.