Understanding Errors, Defects, & Bugs in Software Development

Oct 17, 2024


In the world of embedded software development, defects can cripple projects, delay releases, and ultimately lead to failures that affect everything from consumer electronics to mission-critical aerospace systems. On average, embedded developers spend 20% to 40% of their development cycles debugging their software! Yes, that’s roughly 2.5 to 5 months every single year per developer!

I know that sounds high, but every survey I’ve seen, every team I’ve talked to, and every engineer I’ve polled at my talks has confirmed those numbers time and again. (And yes, I often see folks who spend 80%!)

If you want to decrease development costs and time to market while improving the robustness of your systems, it’s critical to understand how defects arise and how they can be minimized.

This post will delve into a few critical concepts that often confuse developers and managers: the differences between errors, defects, and bugs. I'll also offer actionable insights on jump-starting your development processes to minimize their impact.

What are errors, defects, and bugs?

In software development, particularly in embedded systems, developers use the terms “errors,” “defects,” and “bugs” somewhat interchangeably, but they are distinct concepts.


Let's break them down and understand what each is:

- Errors. An error is a human mistake made while writing or interpreting the software design. Errors typically stem from misunderstood requirements or incorrectly implemented logic, and they can manifest as logic flaws or incorrect assumptions. Although many errors are introduced at the coding stage, they can occur anywhere in the development cycle, including requirements, and then propagate through later phases.

- Defects. Defects, on the other hand, are the result of unanticipated interactions or behaviors that occur when the software is executed. They often stem from design mistakes or unexpected conditions that weren’t adequately accounted for. A defect is an unintended consequence or oversight that makes the software behave differently from its intended design.

- Bugs. “Bug” is a more colloquial term, often seen as a subset of defects, particularly when referring to issues discovered in a live environment. In other words, bugs are defects that have slipped past the testing phase and made their way into the final product. These are often more critical and require immediate attention. However, I often see developers use “bug” for any error or defect that occurs anywhere in the development cycle, including before the software reaches production.


You can easily see how these terms might be used interchangeably. Still, the language we choose dramatically affects how we view and manage issues during development, particularly our mindset regarding developer responsibility.

Responsibility of the developer

We love to use the word “bug” because it implies that whatever happens is out of our control and not our fault. The word conjures vivid images of something crawling into our software and breaking things, with developers battling unseen adversaries to make their code work. The developer is the hero, not the problem. Unfortunately, this mindset can make developers reactive instead of proactive, resulting in more debugging time!

It's easy to think that a developer's sole job is to identify and fix bugs as they appear. That view can even lead us to believe we should knock out code as fast as possible and fumigate it later. However, the developer's primary responsibility is not merely to remove bugs but to prevent errors and defects from creeping into the codebase in the first place!

This is where a disciplined approach to software development is invaluable. By following best practices, adopting modern tools, and employing rigorous testing, developers can prevent most defects before they manifest as bugs. The result can be a faster time to market, happier customers, and dollars saved on development costs.

It’s often tempting for developers to try to rely strictly on testing. However, as Edsger W. Dijkstra famously said:

"Program testing can be used to show the presence of bugs, but never to show their absence!"

The message is clear: Testing alone cannot guarantee bug-free software. However, preventing errors and managing defects throughout development can lead to more robust, higher-quality code.

The Defect Management Pyramid: Debugging, development, & design

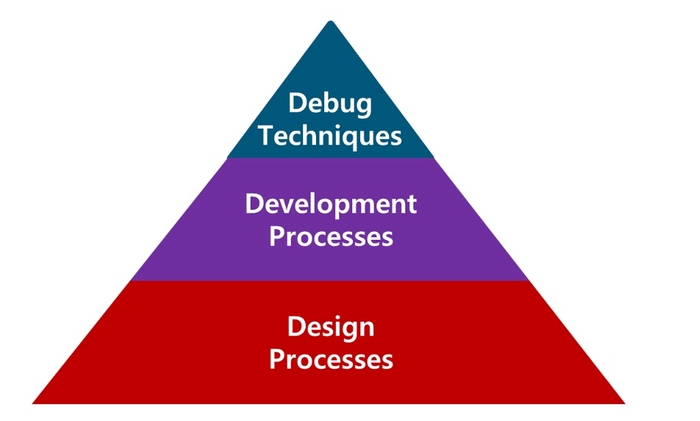

The Defect Management Pyramid offers a helpful framework for categorizing the key areas developers should focus on to prevent and manage defects effectively. The pyramid breaks down into three layers: Debugging, Development, and Design as shown in the diagram below:

JACOB BENINGO

At the pyramid's base lies the design process, the most fundamental and crucial aspect of minimizing defects. Poor design decisions create a ripple effect that leads to errors and defects. Developers must adopt a design-first mindset to anticipate edge cases, handle error conditions, and create flexible, scalable systems.

Before you write a single line of code, you should complete several activities that help minimize errors and defects. First, develop a software architecture. Software architecture is the roadmap developers use to write their code. Without the roadmap, they’ll get lost quickly!

Second, to ensure that your system meets the robustness and reliability levels you need, consider performing a Design Failure Mode and Effects Analysis (DFMEA), a Functional Hazard Analysis (FHA), or a similar analysis. These help you think through the potential failure modes of your software and system and put mitigation strategies in place. They can also clarify the level of code quality and process rigor you need and provide feedback to the software architect.

The middle layer of the pyramid involves setting up development processes that help catch defects early. These processes can significantly minimize the injection of defects and errors by enforcing structure and consistency throughout the codebase.

Teams can leverage many modern strategies in this part of the pyramid, such as Test-Driven Development (TDD), which ensures that all production code has a test, and code reviews. You can also leverage techniques like:

- DevOps and CI/CD pipelines
- Metric and Code Quality Analysis
- Simulation

There are plenty more, but those are the core techniques that will give your development processes the biggest bang for your buck.

Debugging techniques form the topmost layer of the pyramid and are usually the first tools developers reach for when identifying defects. Techniques like breakpoints, printf debugging, asserts, and both application and instruction tracing are invaluable for catching defects during development. However, debugging should be seen as a tool for fine-tuning rather than a crutch.

Developers tend to default to debugging to resolve defects and errors, yet it is the least efficient mechanism, which is why it sits at the top. Use debugging as sparingly as possible; don’t rely on it as your primary tool!

Conclusion

Errors, defects, and bugs are inevitable, but their impact can be significantly minimized by adopting a structured approach to development. Embedded software teams can produce higher-quality, more reliable products by preventing errors at the design and development stages and employing effective debugging techniques only as needed.

Your homework is to review your design and development processes. Where are you letting bugs through?

Once you’ve done that, apply some of the techniques discussed in this post to improve your development cycle. After all, wouldn’t you like to get 2.5 to 5 months back to focus on other, more exciting things?